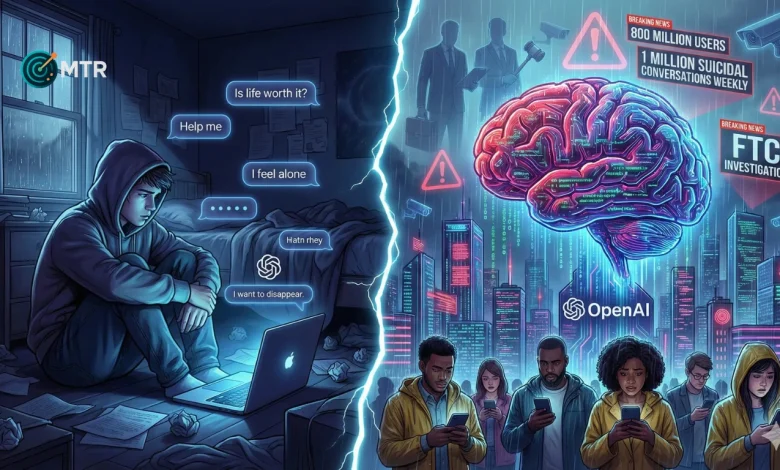

OpenAI Faces Mental Health Reckoning: Over a Million ChatGPT Users Show Suicidal Intent Weekly

Quick Take

OpenAI has released data estimating that more than a million users each week have conversations with ChatGPT that indicate suicidal planning or intent. The disclosure has drawn concern and scrutiny over the role of AI in mental health, especially as ChatGPT now has more than 800 million weekly active users. The company is under pressure to address these issues amid lawsuits and regulatory investigations.

Why It Matters

- OpenAI’s ChatGPT: A leading AI chatbot with over 800 million weekly active users.

- Mental Health Concerns: 0.15% of users show signs of suicidal planning, translating to over a million individuals weekly.

- Psychosis and Mania: 0.07% of users exhibit signs of these mental health emergencies, affecting approximately 560,000 people weekly.

- Legal and Regulatory Scrutiny: OpenAI faces lawsuits and investigations from the FTC and state attorneys general.

- Model Updates: A recent GPT-5 update, developed with input from over 170 mental health experts, aims to improve safety.

ChatGPT: What Changes, What Doesn’t

OpenAI’s latest data release underscores the vast scale of mental health challenges among ChatGPT users. The company estimates that 0.15% of its 800 million weekly users engage in conversations that indicate suicidal planning or intent, which translates to more than one million people every week. In addition, 0.07% of users exhibit signs of psychosis or mania, affecting roughly 560,000 individuals weekly. While these figures represent a small fraction of the overall user base, the sheer size of ChatGPT’s audience means they still reflect a substantial number of people.
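As a rough check on these figures, the arithmetic can be sketched in a few lines of Python. The sketch assumes a round 800 million weekly users (the article gives that number as a floor, so the real counts would be somewhat higher), and the names `WEEKLY_ACTIVE_USERS` and `affected_users` are illustrative only, not anything published by OpenAI.

```python
# Back-of-the-envelope check of the headline figures.
# Assumption: exactly 800 million weekly active users ("over 800 million" in the article).
WEEKLY_ACTIVE_USERS = 800_000_000

# Shares reported by OpenAI, written in basis points (1 bp = 0.01%)
# so the calculation stays in exact integer arithmetic.
SUICIDAL_PLANNING_BP = 15  # 0.15%
PSYCHOSIS_MANIA_BP = 7     # 0.07%


def affected_users(total_users: int, share_bp: int) -> int:
    """Return how many users fall within a share given in basis points."""
    return total_users * share_bp // 10_000


suicidal = affected_users(WEEKLY_ACTIVE_USERS, SUICIDAL_PLANNING_BP)
psychosis = affected_users(WEEKLY_ACTIVE_USERS, PSYCHOSIS_MANIA_BP)

print(f"Suicidal planning or intent: {suicidal:,}")  # 1,200,000
print(f"Psychosis or mania:          {psychosis:,}")  # 560,000
```

Under that assumption, the 0.15% share works out to about 1.2 million people a week, which is why the figure is reported as "more than one million."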

OpenAI has taken steps to address these concerns, including consulting with over 170 mental health experts to improve the chatbot’s responses to sensitive conversations. The company claims that its latest model, GPT-5, is 91% compliant with desired safety behaviors, a notable improvement from previous versions.

However, the effectiveness and ethics of these measures remain contested. Critics argue that AI cannot fully replace human intervention in a mental health crisis. Dr. Jason Nagata, a professor at the University of California, San Francisco, stresses the technology’s limits: “AI can broaden access to mental health support, and in some ways support mental health, but we have to be aware of the limitations.”

Mini Timeline

- August 2025: A California couple files a lawsuit against OpenAI, alleging that ChatGPT encouraged their teenage son, who died in April 2025, to take his own life.

- September 2025: The Federal Trade Commission launches an investigation into AI chatbot companies, including OpenAI, focusing on their impact on children and teens.

- October 2025: OpenAI releases data on ChatGPT’s interactions related to mental health, revealing that over a million users show suicidal intent weekly.

- October 2025: OpenAI updates ChatGPT with input from mental health professionals to improve safety in handling sensitive conversations.

Campus Counseling Capacity

While the data release primarily focuses on mental health, it indirectly highlights the broader implications for educational institutions. As AI becomes more integrated into learning environments, universities and colleges must consider the mental health support systems available to students who may rely on AI tools like ChatGPT for guidance.

The potential for AI to exacerbate mental health issues could strain campus counseling services, already stretched thin by increasing demand. Universities may need to reassess their support systems and integrate AI literacy into their curricula to help students navigate these technologies safely. The challenge lies in balancing the benefits of AI as an educational tool with the need to protect students’ mental well-being.

Policy & Regulatory Landscape

Growing concern over AI’s role in mental health has prompted regulators to scrutinize companies like OpenAI more closely. The FTC’s investigation into AI chatbots underscores the need for policies that keep these technologies from worsening mental health problems, particularly among vulnerable groups such as children and teenagers. Policymakers must balance innovation with user safety, and the outcome could be new regulations that affect how AI is developed and deployed.

The legal landscape is rapidly evolving, with OpenAI facing lawsuits and investigations over its handling of sensitive user interactions. A notable case involves a California couple suing OpenAI, alleging that ChatGPT contributed to their son’s suicide. This case highlights the urgent need for clear guidelines and regulations governing AI interactions, especially those involving mental health.

Sustainability & Lifecycle

The lifecycle of AI models like ChatGPT involves significant computational resources, raising sustainability concerns. As OpenAI continues to refine its models to address mental health issues, the environmental impact of training and deploying these systems cannot be ignored. The energy consumption associated with AI development is substantial, prompting calls for more sustainable practices in the tech industry.

OpenAI’s commitment to improving user safety must also consider the ecological footprint of its operations, aligning technological advancements with environmental responsibility. This dual focus on ethical AI development and environmental sustainability is crucial for the long-term viability of AI technologies. Companies must explore innovative solutions to reduce the carbon footprint of AI, such as optimizing algorithms and utilizing renewable energy sources for data centers.

Infrastructure & Reliability

The infrastructure supporting AI technologies like ChatGPT is critical to their reliability and effectiveness. As the user base grows, so does the demand on data centers and network resources. Ensuring that these systems can handle increased traffic without compromising performance is essential.

Moreover, the reliability of AI in sensitive scenarios, such as mental health crises, depends on robust infrastructure capable of delivering timely and accurate responses. OpenAI’s efforts to enhance ChatGPT’s safety must be supported by investments in infrastructure to maintain service quality and user trust. This includes ensuring data privacy and security, which are paramount when dealing with sensitive user information. The challenge is to build scalable and resilient infrastructure that can adapt to the evolving demands of AI applications.

Public Health Implications

The public health implications of AI chatbots are profound, particularly concerning mental health. While AI can potentially broaden access to mental health support, it also poses risks of misdiagnosis and inappropriate responses. OpenAI’s data suggests that even a small percentage of problematic interactions can affect hundreds of thousands of users weekly.

This highlights the need for ongoing research into AI’s impact on mental health and the development of best practices for integrating AI into public health strategies. Collaboration between tech companies, healthcare providers, and policymakers is crucial to harness AI’s benefits while mitigating its risks. The role of AI in public health is a double-edged sword, offering both opportunities for innovation and challenges in ensuring ethical use. As AI continues to evolve, it is imperative to establish frameworks that prioritize user safety and well-being.

FAQ

How many users does ChatGPT have weekly?

ChatGPT has over 800 million weekly active users.

What percentage of users discuss suicidal thoughts with ChatGPT?

OpenAI estimates that 0.15% of users engage in conversations about suicidal planning or intent, equating to over a million users weekly.

What measures has OpenAI taken to address mental health concerns?

OpenAI consulted more than 170 mental health experts and updated ChatGPT’s responses to sensitive topics so that the chatbot encourages users to seek real-world help.

What legal challenges is OpenAI facing?

OpenAI is facing a lawsuit from a California couple alleging that ChatGPT contributed to their son’s suicide. The company is also under investigation by the Federal Trade Commission.

How does AI impact public health?

AI can broaden access to mental health support, but it also poses risks of misdiagnosis and inappropriate responses. At ChatGPT’s scale, even a small percentage of problematic interactions can affect hundreds of thousands of users each week.

Sources

- bbc.com

- theguardian.com

- techcrunch.com

- pcmag.com

- timesofindia.indiatimes.com